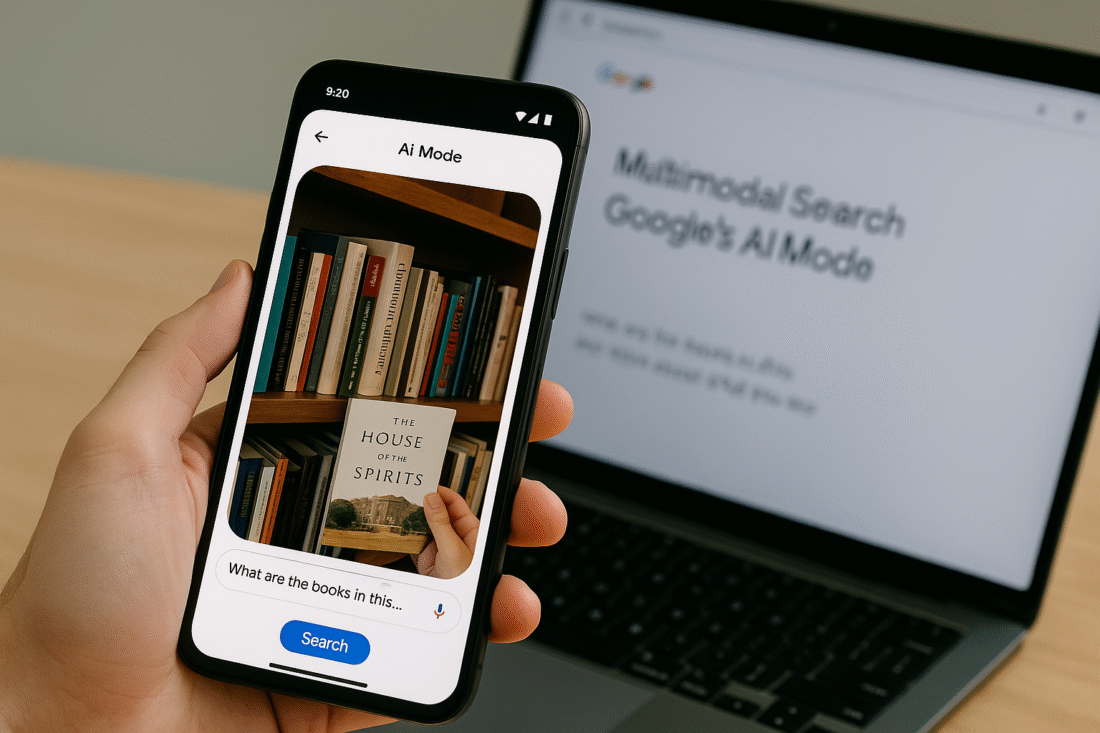

Google is enhancing its experimental AI Mode by introducing multimodal search capabilities, powered by Google Lens and Gemini (blog.google).

Snap, Search, Ask

- You can now take a photo or upload an image.

- Ask questions about what you see.

- Get a rich, contextual answer with links to explore further. For example, AI Mode can identify the book titles on your shelf and suggest similar ones (blog.google).

Gemini + Lens = Smarter Imaging

- Gemini processes the entire visual scene, understanding objects, materials, colors, and spatial relationships.

- Lens pinpoints each item in the image.

- Using a technique called query fan-out, AI Mode conducts multiple searches—about the scene and individual objects—for a more nuanced response (blog.google).
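
The fan-out idea above can be sketched in a few lines of Python. This is only an illustration of the general pattern (one query about the whole scene, one per detected object, all run concurrently), not Google's implementation. The names `Scene`, `build_queries`, `fake_search`, and `fan_out` are hypothetical, and the search backend is a stub that returns a labelled string.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Scene:
    description: str      # whole-scene summary (the role the post gives Gemini)
    objects: list[str]    # individual items (the role the post gives Lens)


def build_queries(scene: Scene, question: str) -> list[str]:
    """Build one query about the whole scene, plus one per detected object."""
    queries = [f"{question} {scene.description}"]
    queries += [f"{question} {obj}" for obj in scene.objects]
    return queries


def fake_search(query: str) -> str:
    """Hypothetical stand-in for a search backend; returns a labelled stub."""
    return f"results for: {query}"


def fan_out(scene: Scene, question: str, search=fake_search) -> dict[str, str]:
    """Run all sub-queries concurrently and collect results keyed by query."""
    queries = build_queries(scene, question)
    with ThreadPoolExecutor(max_workers=4) as pool:
        results = list(pool.map(search, queries))  # map preserves input order
    return dict(zip(queries, results))


if __name__ == "__main__":
    # Assumed example input: a bookshelf photo already reduced to text labels.
    shelf = Scene("bookshelf with three novels", ["Dune", "Neuromancer", "Kindred"])
    for query, result in fan_out(shelf, "books similar to").items():
        print(query, "->", result)
```

In a real system the per-query results would then be merged and summarized into a single answer; that step is left out here because the source does not describe it.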

What Users Say

- Early feedback from Google One AI Premium users highlights AI Mode’s clean UI, fast replies, and ability to handle complex, exploratory queries like product comparisons or planning travel (blog.google).

- Compared to classic search, AI Mode queries are much longer and more detailed, which suits in-depth research sessions.

Where to Try It

- AI Mode is now rolling out to millions of Search Labs users in the U.S.—you can sign up via Google Labs inside the Google app (iOS/Android) (blog.google).

- Google continues to refine the experience based on user feedback.

Why This Matters

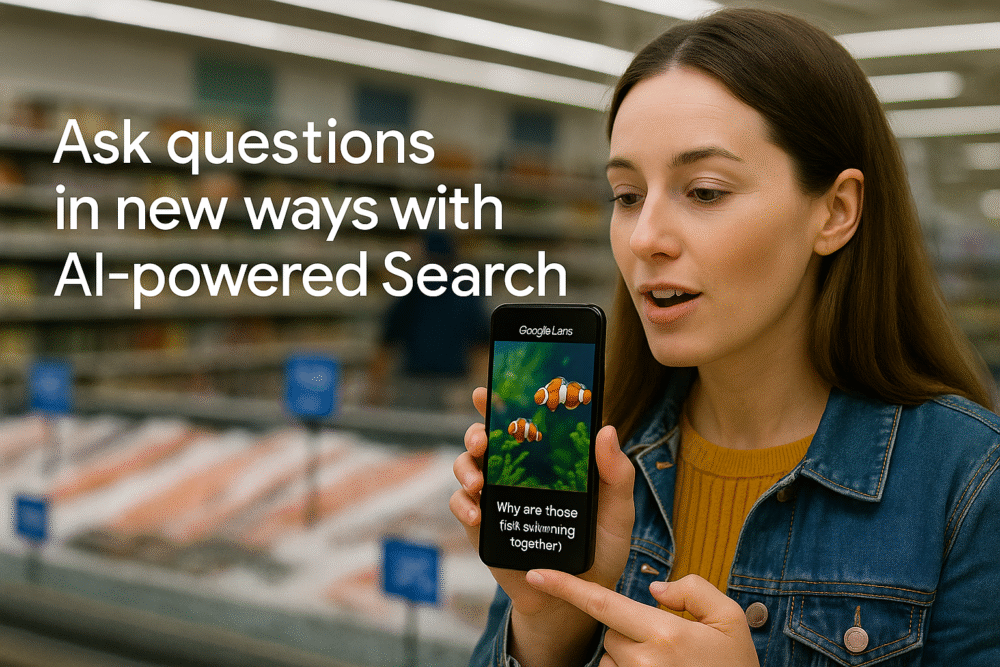

- Moves search from keyword-driven to image-aware and context-rich.

- Enables new possibilities: ask about what you see and instantly get recommendations—like finding similar books with ratings and purchase links.

- Supports follow-up queries, allowing deeper exploration without restarting your search (blog.google).

In Summary

Google’s AI Mode now supports visual search alongside text and voice, delivering multimodal answers. Whether you’re identifying products, studying a scene, or comparing items, the system adapts to the input, making search more intuitive and powerful.

To experience it firsthand, explore AI Mode in Search Labs and let Google know how it’s working for you.